ChatGPT, developed by OpenAI, has become one of the most popular AI tools since its launch in 2022. Millions of people use it daily for tasks like answering questions, generating content, coding, and more. Its versatility has made it a go-to tool for both personal and professional use. However, with its widespread adoption, questions about its safety, privacy, and security have grown.

Introduction to ChatGPT

ChatGPT is an advanced AI chatbot powered by OpenAI’s large language models (LLMs). It can perform a wide range of tasks, from answering complex questions to helping with creative writing, coding, and even simplifying complex topics. Since its release, it has gained over 180 million users worldwide, with many using it daily for personal and work-related tasks. Its popularity stems from its ability to mimic human-like conversations and provide quick, useful responses.

Safety Features of ChatGPT

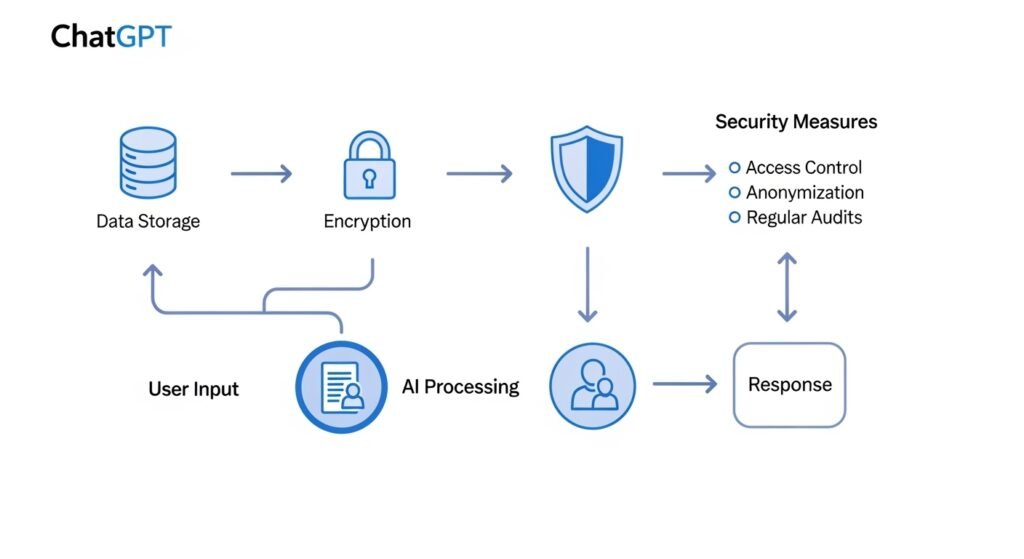

OpenAI has implemented several measures to ensure ChatGPT is secure for users. These safeguards are designed to protect users and maintain the integrity of the platform:

- Content Moderation: ChatGPT uses a combination of algorithms and human reviewers to filter out harmful, offensive, or inappropriate content. This helps prevent the spread of hate speech, misinformation, or explicit material.

- Regular Security Audits: OpenAI conducts frequent security audits to identify and address potential vulnerabilities in ChatGPT’s systems. These audits help ensure the platform remains secure against cyber threats.

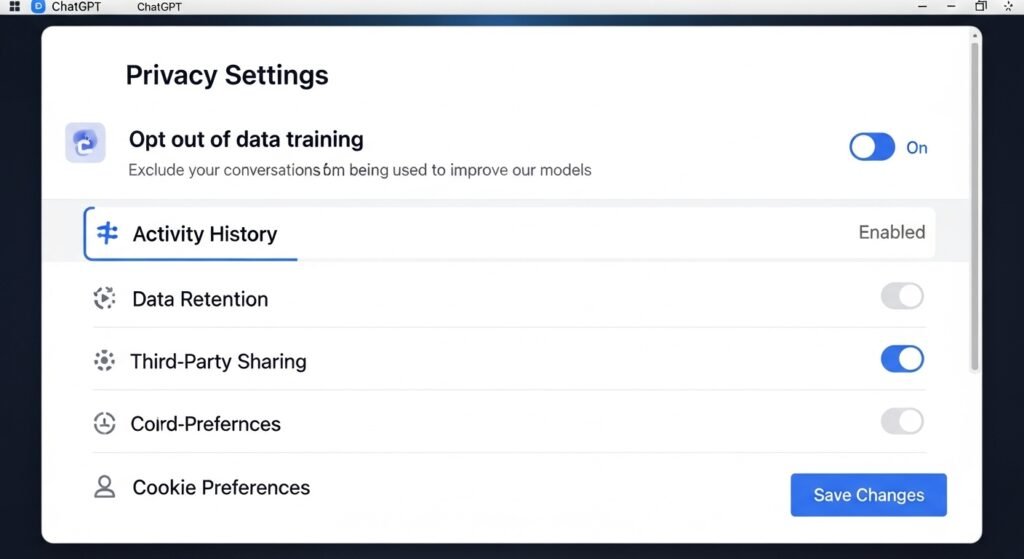

- Privacy Policy: OpenAI’s privacy policy outlines how user data is collected and used. Users can opt out of having their conversations used to train future models, giving them some control over their data.

- Data Encryption: Conversations with ChatGPT are encrypted during transmission, and OpenAI follows industry-standard security practices to protect user information.

Privacy Concerns with ChatGPT

While ChatGPT offers many benefits, there are important privacy considerations to keep in mind:

- Data Collection: When you use ChatGPT, your conversations are stored for 30 days to monitor for abuse, even if you opt out of data training. This means sensitive information could potentially be accessed during this period.

- Non-Confidential Conversations: Your chats with ChatGPT are not private. They may be reviewed by AI trainers to improve the system, raising concerns about the privacy of personal or sensitive information shared during conversations.

- Third-Party Risks: As a third-party service, ChatGPT is vulnerable to data breaches or unauthorized access. For example, in March 2023, a data leak occurred where some users saw others’ conversation histories, highlighting the potential for privacy issues.

Potential Risks of Using ChatGPT

Despite its safety features, ChatGPT is not without risks. Below are some of the key concerns users should be aware of:

- Misinformation: ChatGPT generates responses based on patterns in its training data, which can sometimes lead to inaccurate or biased information. This can be problematic if users rely on ChatGPT without verifying its responses, potentially spreading misinformation.

- Data Breaches: Like any online service, ChatGPT is susceptible to cyberattacks. The March 2023 data leak, where some users’ conversation histories were exposed, serves as a reminder that no system is entirely immune to breaches.

- Misuse for Malicious Purposes: Bad actors can use ChatGPT to create convincing phishing emails, craft deepfake voice scams, or even generate harmful content. In 2025, OpenAI warned that the new ChatGPT Agent feature could potentially aid in dangerous activities, such as bioweapon development, highlighting the risks of misuse.

- Unauthorized Access: Techniques like prompt injection, where malicious actors craft prompts to bypass safety guardrails, can trick ChatGPT into revealing sensitive information. This is a newer category of risk that users should be aware of.

The following table summarizes the key risks and their implications:

| Risk | Description | Impact |

|---|---|---|

| Misinformation | ChatGPT may provide inaccurate or biased responses based on its training data. | Can lead to misinformation if not verified, affecting decision-making. |

| Data Breaches | Vulnerabilities could expose user data, as seen in the 2023 leak incident. | Potential loss of privacy and exposure of sensitive information. |

| Malicious Misuse | Bad actors can use ChatGPT for phishing, scams, or harmful content creation. | Increases the risk of fraud and harm to users or organizations. |

| Prompt Injection | Malicious prompts can bypass safety measures to extract sensitive data. | Compromises user data security and system integrity. |

Best Practices for Safe Use of ChatGPT

To use ChatGPT safely, follow these practical guidelines:

- Do Not Share Sensitive Information: Avoid sharing personally identifiable information (PII), financial details, passwords, confidential business data, or sensitive personal stories. For example, never input credit card numbers or health information.

- Verify Information: Always fact-check ChatGPT’s responses, as they may not always be accurate. Cross-reference with reliable sources, especially for critical tasks.

- Use for General Queries: Stick to using ChatGPT for general information, creative tasks, or brainstorming rather than for sensitive or critical tasks.

- Opt Out of Data Training: If you’re concerned about your data being used to train future models, opt out through your account settings. This can be done via the ChatGPT privacy settings.

- Monitor Your Account: Regularly check your account activity for any suspicious behavior and report issues to OpenAI immediately.

- Stay Informed: Keep up with the latest security updates and features from OpenAI, such as changes introduced with ChatGPT Agent or the upcoming GPT-5 release.

Recent Updates on ChatGPT Safety (2025)

As of August 2025, several developments have impacted ChatGPT’s safety landscape:

- ChatGPT Agent: This new feature, introduced in 2025, allows ChatGPT to perform real-world actions, such as booking appointments or making purchases. While convenient, it has raised significant privacy and security concerns due to its autonomy. Experts warn that this feature could be exploited for malicious purposes if not carefully managed.

- Bioweapon Risks: In July 2025, OpenAI issued a warning that the ChatGPT Agent feature could potentially aid in dangerous activities, such as bioweapon development. This highlights the need for stricter oversight of AI capabilities.

- GPT-5 Release: Scheduled for August 2025, GPT-5 is expected to bring faster responses and improved safety features. This update aims to address some of the current limitations and risks associated with ChatGPT.

- Commercial Changes: New connectors and changes in how ChatGPT operates in the commercial marketplace may affect how businesses use and secure their data with the AI. These changes are particularly relevant for startups and organizations adopting ChatGPT for professional use.

Final Thoughts

AI tools like ChatGPT offer incredible opportunities to simplify tasks and boost productivity. However, they also come with responsibilities. By being proactive about privacy and security, you can enjoy the advantages of ChatGPT while protecting your personal and sensitive information. As we move toward the release of GPT-5 and beyond, staying educated about AI safety will remain essential for all users.