ChatGPT, created by OpenAI, is a powerful AI tool that sparks curiosity about how it works. Many people wonder: does it learn from its users? The question touches on AI training, privacy, and the future of technology. In this article, we'll explain how ChatGPT uses user data, address privacy concerns, and answer common questions from users. Our goal is to provide clear, up-to-date information that is useful for all readers.

What Is ChatGPT?

ChatGPT is an AI chatbot that generates human-like text. It was originally built on OpenAI's GPT-3.5 models, and newer versions use later models in the GPT family. These are large language models (LLMs) trained on vast amounts of text, such as books and websites. This training helps ChatGPT understand and respond to questions on many topics. OpenAI uses a method called Reinforcement Learning from Human Feedback (RLHF) to make ChatGPT's responses more accurate and conversational. People use it for tasks like answering questions, writing essays, and coding.

Does ChatGPT Learn from Users?

Yes, ChatGPT learns from users, but not in real time. OpenAI may use user interactions to improve future versions of the model; it does not retrain the model during a conversation. By default, each chat starts fresh and the model itself doesn't change between sessions. The exception is ChatGPT's Memory feature, which, when enabled, can save details you've shared for use in later chats. Beyond that, OpenAI draws on conversations from many users to spot patterns and refine the model's performance. Part of this process relies on RLHF, in which human trainers review and rank responses to make them better over time.

For example, if you ask ChatGPT about a recipe, it uses its pre-trained knowledge to answer. Your question may later help OpenAI improve how ChatGPT handles similar queries, but it won’t affect your next chat directly.

How Does ChatGPT Learn?

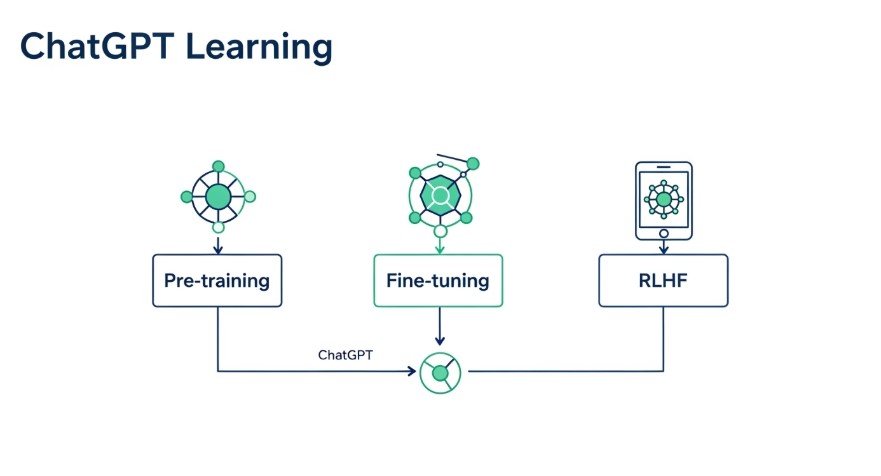

ChatGPT’s learning process has three main steps:

- Pre-training: The model studies a huge dataset of public text to learn language patterns, like predicting the next word in a sentence.

- Fine-tuning: Human trainers simulate conversations, playing both the user and AI, to teach ChatGPT how to respond naturally.

- Reinforcement Learning from Human Feedback (RLHF): Trainers rank several candidate responses from best to worst. These rankings train a reward model, which is then used to steer ChatGPT toward better answers. User data helps refine this process over time.
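To make the pre-training step more concrete, here is a deliberately tiny sketch of next-word prediction in Python. It is a toy bigram model that simply counts which word tends to follow which; real models like GPT use neural networks over tokens and vastly more data. The function names and the three-sentence corpus are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigram(corpus: List[str]) -> Dict[str, Counter]:
    """Count how often each word follows another (a toy stand-in for pre-training)."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair each word with the word that comes right after it.
        for current, nxt in zip(words, words[1:]):
            counts[current][nxt] += 1
    return counts


def predict_next(counts: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


if __name__ == "__main__":
    # Hypothetical mini-corpus, invented for this example.
    corpus = [
        "the cat sat on the mat",
        "the cat chased the mouse",
        "the dog sat on the rug",
    ]
    model = train_bigram(corpus)
    print(predict_next(model, "the"))  # prints "cat"
    print(predict_next(model, "sat"))  # prints "on"
```

"cat" wins because it follows "the" twice in the corpus, while every other follower appears once. An LLM learns the same kind of statistical pattern, but it takes far longer context into account than a single preceding word.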

User interactions don't change ChatGPT instantly, but they can contribute to later updates. For instance, OpenAI might notice that many users ask about coding and improve those responses in a new version.

Table: ChatGPT’s Learning Stages

| Stage | Description | User Data Role |
|---|---|---|
| Pre-training | Learns from public text to predict words. | None; uses internet data. |
| Fine-tuning | Trainers simulate conversations for natural responses. | Indirect; shapes conversational skills. |
| RLHF | Ranks responses to improve quality. | Indirect; user data refines future updates. |

How Does OpenAI Use User Data?

OpenAI may use user conversations to improve ChatGPT, but not for real-time learning. Instead, it analyzes aggregated data to find trends. For example:

- If many users ask about recent events, OpenAI might prioritize updating the model’s knowledge.

- Some conversations are reviewed by human trainers to spot errors or biases, which helps make future versions more accurate.

OpenAI's privacy policy, updated in 2025, states that user data is anonymized and not used to track individuals. You can opt out of having your conversations used for model training through the OpenAI Privacy Portal, which gives you control over how your chats are used.

Privacy and User Control

Privacy is a big concern with AI. OpenAI takes steps to protect users:

- Reduced Personal Data: OpenAI says it takes steps to reduce the amount of personal information in conversations before they are used for training.

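- Opt-Out Option: You can stop your chats from being used for training by turning off the “Improve the model for everyone” setting in Data Controls, by using Temporary Chat, or by submitting a request through the Privacy Portal.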

- Secure Storage: Data is encrypted and not shared with third parties without consent.
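As a rough illustration of what reducing personal details can involve, the sketch below uses regular expressions to replace email addresses and phone-number-like strings with placeholders. It is a toy under stated assumptions, not OpenAI's actual pipeline: the `redact` helper and both patterns are hypothetical, and real de-identification must also handle names, addresses, and many formats that simple patterns miss.

```python
import re

# Hypothetical patterns for two common kinds of personal data.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# A run of at least 9 digits/separators that starts and ends with a digit.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-number-like strings with placeholders."""
    # Redact emails first so digits inside an address are not mistaken for a phone number.
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    message = "Email me at jane.doe@example.com or call +1 555-123-4567."
    print(redact(message))  # prints "Email me at [EMAIL] or call [PHONE]."
```

Pattern-based redaction like this only catches the formats it was written for, so it reduces personal data rather than guaranteeing its removal.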

However, users should avoid sharing sensitive information, as OpenAI employees may review chats to improve the system. Always check the OpenAI Privacy Policy for the latest details.

Limitations of ChatGPT’s Learning

ChatGPT has limits that affect how it learns:

- No Real-Time Updates: It doesn’t learn from your chats instantly; updates happen in new model versions.

- Knowledge Cutoff: Each model is trained on data up to a certain date, so it may have limited information about recent events; verify recent facts.

- Bias Risks: Training data from the internet may include biases, which OpenAI works to reduce.

- Accuracy Issues: ChatGPT can give wrong answers, so always double-check important information.

These limits mean users should use ChatGPT as a tool, not a definitive source.

Common Questions Answered

Does ChatGPT Remember My Conversations?

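Not in the sense of learning from them. Each conversation starts fresh by default, and the model doesn't update itself based on your chats. However, your chats are saved in your history unless you delete them or use Temporary Chat, and if the Memory feature is enabled, ChatGPT can recall details you've shared across conversations. Aggregated data may also be used to improve future versions of the model.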

Can I Stop ChatGPT from Using My Data?

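Yes. You can opt out through the OpenAI Privacy Portal or turn off the “Improve the model for everyone” setting in Data Controls. For individual conversations, Temporary Chat keeps a chat out of your history and out of model training.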

Is ChatGPT Safe to Use?

It's generally safe if you avoid sharing personal details. OpenAI says it encrypts data and complies with privacy laws such as GDPR and CCPA.

How Does RLHF Work?

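RLHF uses human trainers to rank alternative responses to the same prompt. A reward model learns from those rankings, and ChatGPT is then tuned to produce responses that the reward model scores highly. All of this happens during training, not while you chat.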
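The ranking step can be sketched as training a small reward model with a pairwise loss, a common formulation in published RLHF work: for each pair in which a trainer preferred one response over another, the model is nudged to score the preferred response higher. This Python sketch is a simplification under stated assumptions. Each response is reduced to two hypothetical numeric features (a helpfulness rating and a count of factual errors), the reward model is linear rather than a neural network, and the later reinforcement-learning step that tunes ChatGPT against the reward model is not shown.

```python
import math

# Hypothetical training data: each response is described by two features,
# [helpfulness rating, number of factual errors]. In every pair, a trainer
# ranked the first response above the second.
PAIRS = [
    ([0.9, 0.0], [0.4, 1.0]),
    ([0.8, 0.0], [0.7, 2.0]),
    ([0.6, 1.0], [0.2, 3.0]),
]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def reward(weights: list, features: list) -> float:
    """Linear reward model: a weighted sum of a response's features."""
    return sum(w * f for w, f in zip(weights, features))


def pairwise_loss(weights: list, chosen: list, rejected: list) -> float:
    """Pairwise ranking loss: -log(sigmoid(r_chosen - r_rejected))."""
    margin = reward(weights, chosen) - reward(weights, rejected)
    # -log(sigmoid(m)) simplifies to log(1 + exp(-m)).
    return math.log1p(math.exp(-margin))


def train_reward_model(pairs, num_features: int, lr: float = 0.1, epochs: int = 200) -> list:
    """Fit weights by gradient descent so that preferred responses score higher."""
    weights = [0.0] * num_features
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(weights, chosen) - reward(weights, rejected)
            # The loss gradient for weight i is -(1 - sigmoid(margin)) * (chosen[i] - rejected[i]),
            # so a descent step adds a positive multiple of the feature difference.
            scale = 1.0 - sigmoid(margin)
            weights = [
                w + lr * scale * (c - r)
                for w, c, r in zip(weights, chosen, rejected)
            ]
    return weights


if __name__ == "__main__":
    weights = train_reward_model(PAIRS, num_features=2)
    print("learned weights:", [round(w, 3) for w in weights])
    for chosen, rejected in PAIRS:
        print(f"chosen {reward(weights, chosen):.2f} vs rejected {reward(weights, rejected):.2f}")
```

After training, the helpfulness weight ends up positive and the error weight negative, so the model has learned, from rankings alone, that trainers prefer helpful, accurate answers.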

Tips for Using ChatGPT Safely

- Avoid Sensitive Info: Don’t share personal or confidential details.

- Opt Out if Concerned: Use the Privacy Portal to control data usage.

- Verify Answers: Check facts, especially for critical tasks.

- Read Policies: Stay updated on OpenAI’s privacy rules.


Conclusion

ChatGPT learns from users indirectly: OpenAI may use conversations, after taking steps to reduce personal information, to improve future versions of the model, including through RLHF. The model doesn't update itself during your chats, and unless Memory is enabled, each conversation starts fresh. Users can opt out of having their data used for training for added control. While powerful, ChatGPT has limits, like potential biases and outdated knowledge, so use it wisely. By understanding how it learns, you can make informed choices about using this AI tool.