ChatGPT is a powerful tool for conversations, research, and tasks. But many users notice it slows down as chats get longer. Is it your device, or is it ChatGPT’s design? This article covers the reasons behind the slowdown, offers simple fixes, and answers common questions so you can keep your chats fast. The advice reflects what was known as of July 24, 2025.

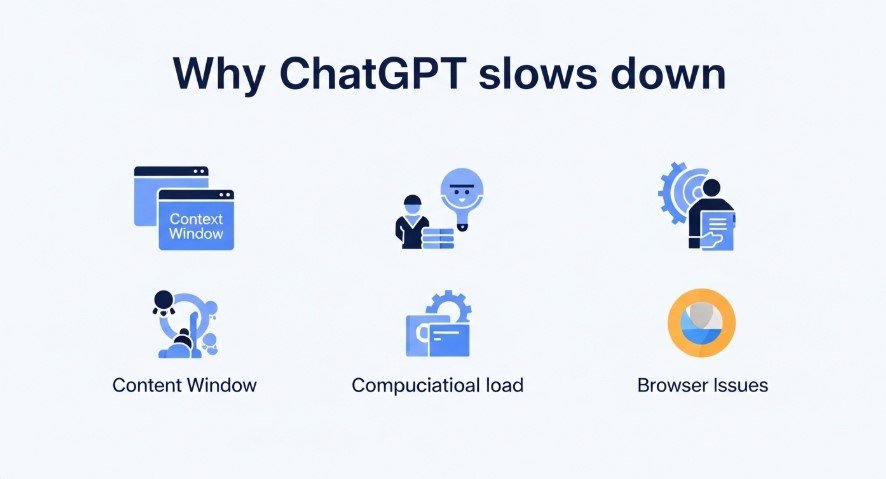

Why Does ChatGPT Slow Down?

ChatGPT’s performance can dip during long conversations due to technical limits, user-side issues, and server factors. Here’s a breakdown of the main causes.

Technical Limits of ChatGPT

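ChatGPT processes text as tokens, which are chunks of text that can be whole words, parts of words, or punctuation marks. As a conversation grows, the tokens pile up and every new reply has more context to work through. Here are the key technical reasons:

- Context Window Size: Each model has a token limit, such as 4,096 tokens for the original GPT-3.5 (roughly 3,000 English words). Newer models have larger windows, but the more of the window a chat fills, the longer each response takes to process.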

- Memory Usage: Storing long chat histories takes more memory, slowing down the system, especially on shared servers.

- Computational Load: Longer chats require ChatGPT to process more context, making responses take longer.

- Token Overhead: Each new reply is generated against the full chat history, so the cost grows with the length of the whole conversation, not just your latest message.
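
To get a feel for how quickly a chat fills the context window, here is a minimal sketch that estimates token counts. It assumes a rough rule of thumb of about four characters per token for English text; real tokenizers give different counts, and the function names and constants are hypothetical, chosen only for illustration.

```python
import math

# Assumption: about 4 characters per token for English text. This is a rule
# of thumb, not the real tokenizer. 4,096 is the GPT-3.5 limit quoted above.
CHARS_PER_TOKEN = 4
CONTEXT_LIMIT = 4096


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate for a piece of text."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def context_usage(messages: list[str], limit: int = CONTEXT_LIMIT) -> float:
    """Return the estimated fraction of the context window the chat uses."""
    total = sum(estimate_tokens(message) for message in messages)
    return total / limit


if __name__ == "__main__":
    chat = ["Explain how tokens work. " * 50, "Sure, here is an overview. " * 200]
    print(f"Estimated context used: {context_usage(chat):.0%}")
```

If the estimate gets close to 100%, that is a reasonable signal to summarize and start a fresh chat.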

User-Side Issues

Your device or setup can also make ChatGPT feel slower:

- Browser Rendering: Long chat threads with text or code can lag in browsers like Chrome or Safari.

- Internet Speed: Slow or unstable connections and high latency delay data transfer to and from OpenAI’s servers.

- Browser Extensions: Ad blockers or script blockers can interfere with ChatGPT’s performance.

Server-Side Factors

OpenAI’s servers can also affect speed:

- High Traffic: During peak hours, heavy user demand slows down responses.

- API Latency: Network delays in API calls add time, especially in long chats.

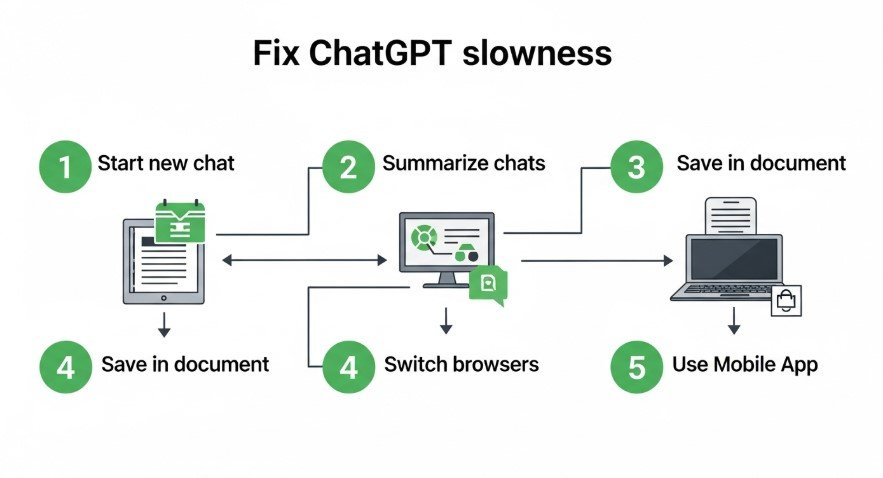

How to Fix ChatGPT Slowness

You don’t have to live with slow responses. Here are practical, tested solutions to speed up ChatGPT during long conversations.

Top Fixes for Faster Chats

- Start a New Chat: Reset the token load by starting a fresh chat. This clears the history and boosts speed.

- Summarize Past Chats: Copy key points from a long chat and paste them into a new one to keep context without overloading.

- Save Chats in a Document: Store conversations in a .txt or .docx file for reference. Upload or summarize them in a new chat.

- Switch Browsers: If Chrome lags, try Firefox or Edge. Some browsers handle long threads better.

- Use the Mobile App: The ChatGPT app often performs better than browsers for long chats.
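
The save-and-summarize steps above can be sketched in a few lines. This hypothetical helper writes the full chat to a .txt file and builds a short opening message for a new chat from key points you pick by hand. The function names, file path, and prompt wording are all assumptions, and nothing here talks to ChatGPT itself.

```python
from pathlib import Path


def save_chat(messages: list[str], path: str) -> Path:
    """Write the full conversation to a text file, one message per paragraph."""
    target = Path(path)
    target.write_text("\n\n".join(messages), encoding="utf-8")
    return target


def build_carryover_prompt(key_points: list[str]) -> str:
    """Build the first message for a fresh chat from hand-picked key points."""
    # Skip empty entries and trim stray whitespace around each point.
    bullets = "\n".join(f"- {point.strip()}" for point in key_points if point.strip())
    return "Context from an earlier chat:\n" + bullets + "\n\nPlease continue from here."


if __name__ == "__main__":
    save_chat(["User: Plan my launch.", "Assistant: Here is a draft plan."], "old_chat.txt")
    print(build_carryover_prompt(["Launch date is in March", "Budget is fixed"]))
```

Paste the printed text as the first message of the new chat. The old conversation stays on disk for reference without adding to the new chat's token load.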

Extra Tips for Better Performance

- Clear Browser Cache: Old cache data can slow things down. Clear it regularly.

- Check Server Status: Visit OpenAI’s Status Page to see if servers are overloaded.

- Disable Extensions: Turn off browser extensions or use incognito mode to avoid interference.

- Improve Internet: Use a wired connection or upgrade to fiber optic for lower latency.

| Solution | Benefit | Best For |
|---|---|---|
| Start New Chat | Resets token load | New topics |
| Summarize Chats | Keeps context, reduces tokens | Ongoing projects |
| Save in Document | Preserves history | Long-term use |
| Switch Browsers | Fixes rendering issues | Browser lag |
| Use Mobile App | Better performance | Frequent chats |

Common Questions Answered

Here are short answers to the questions readers search for most often, drawn from Google’s “People Also Ask” and “Related Searches”:

Why does ChatGPT slow down in long chats?

It’s due to token limits, memory usage, browser rendering, and server load.

How can I make ChatGPT faster?

Start new chats, summarize history, or use the mobile app. Clear cache and check internet speed.

Can I keep chat history without slowing down?

Yes, save chats in a document or summarize key points for a new chat.

Does ChatGPT Plus fix slowness?

It may help with priority access, but long chats still face token limits.

Why is the mobile app faster?

The app renders conversations natively, so it can avoid the browser rendering lag that long threads cause. Results vary by device.

Are there better alternatives?

Models like Google’s Gemini may respond faster for some tasks due to different context handling.

What’s Next for ChatGPT?

OpenAI is likely working on better memory management and larger context windows. For now, no official fix exists for long-chat slowness, but the workarounds above are effective. Monitor OpenAI’s Status Page for updates on server performance or new features.

Conclusion

ChatGPT can slow down in long conversations due to token limits, memory strain, browser issues, and server load. But you can keep chats fast by starting new threads, summarizing history, saving chats in documents, switching browsers, or using the mobile app. These solutions, backed by user reports and technical insights, usually make for a smoother experience. Try them, and check OpenAI’s Status Page for server updates if responses are still slow.