Artificial intelligence is reshaping how we interact with technology. ChatGPT, created by OpenAI, generates human-like responses for tasks like answering questions or writing text. However, it has limitations. Its knowledge is limited to its training data, which ends at a fixed cutoff date, so its answers about recent developments can be out of date. It can also produce confident but incorrect answers, known as hallucinations. Retrieval-Augmented Generation (RAG) is a technique that helps address these issues by combining external information retrieval with ChatGPT’s text generation. This guide explains how RAG enhances ChatGPT prompts, its benefits, implementation steps, and real-world applications.

What is Retrieval-Augmented Generation (RAG)?

RAG is a method that pairs information retrieval with text generation. It lets a model like ChatGPT draw on external knowledge bases, such as documents or databases, to provide more accurate and relevant answers. Introduced in 2020 by researchers at Facebook AI Research (now Meta AI), RAG retrieves relevant data and uses it to inform the model’s responses, reducing reliance on static training data.

How RAG Works

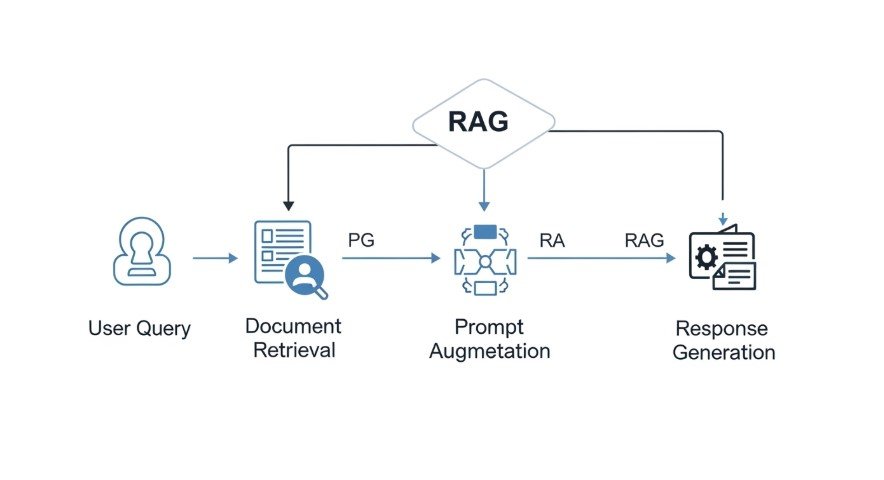

RAG operates in four steps:

- User Query: A user asks a question, like “What are the latest AI trends in 2025?”

- Retrieval: The system searches a knowledge base (e.g., recent articles) and fetches relevant documents.

- Prompt Augmentation: The retrieved data is added to the user’s prompt.

- Response Generation: ChatGPT uses the augmented prompt to generate an answer grounded in the retrieved information.
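The four steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production pipeline: the knowledge base is a hypothetical list of placeholder snippets, retrieval is a naive keyword-overlap score rather than vector search, and `generate` is any function that takes a prompt string and returns text. In a real system that function would call ChatGPT through the OpenAI API; here an echo stub stands in so the example runs offline.

```python
import re
from typing import Callable, List, Set

# Hypothetical placeholder documents standing in for a real knowledge base.
KNOWLEDGE_BASE = [
    "Trend report: AI agents and smaller models are key AI trends this year.",
    "Product note: the X100 blender has three speed settings.",
    "Recipe: how to bake sourdough bread at home.",
]

# Common words ignored during matching so they do not inflate scores.
STOPWORDS = {"a", "an", "and", "are", "in", "is", "of", "the", "to", "what"}


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and return its distinct non-stopword words."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Step 2: return up to k documents sharing the most words with the query."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc)), doc) for doc in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def augment(query: str, passages: List[str]) -> str:
    """Step 3: add the retrieved passages to the user's question."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


def rag_answer(query: str, docs: List[str], generate: Callable[[str], str]) -> str:
    """Steps 1-4: take the user query, retrieve, augment, then generate."""
    passages = retrieve(query, docs)
    return generate(augment(query, passages))


def echo_model(prompt: str) -> str:
    """Stand-in for a ChatGPT call: returns the prompt it would have sent."""
    return prompt


if __name__ == "__main__":
    print(rag_answer("What are the latest AI trends in 2025?", KNOWLEDGE_BASE, echo_model))
```

Because `generate` is passed in as a function, the same flow works with any model client; only the stub needs replacing.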

Why Combine RAG with ChatGPT Prompts?

Combining RAG with ChatGPT prompts improves the AI’s performance in several ways:

- Reduces Errors: RAG grounds answers in retrieved source material, which reduces incorrect or made-up answers.

- Accesses Current Information: It pulls recent data, overcoming ChatGPT’s fixed knowledge cutoff.

- Improves Relevance: RAG tailors responses to specific domains, like medicine or law.

- Enables Updates: Knowledge bases can be updated without retraining ChatGPT.

For example, a user asking about 2025 AI regulations may get outdated answers from ChatGPT alone. With RAG, the system retrieves the latest regulatory documents, so the response can reflect the current rules.

Real-World Example

A company uses a RAG-enhanced ChatGPT chatbot for customer support. When a customer asks about a product’s features, RAG retrieves the latest product manual, so the response reflects the current documentation.

Table: RAG vs. ChatGPT Alone

| Feature | ChatGPT Alone | RAG + ChatGPT |
|---|---|---|
| Knowledge Source | Fixed training data | External, updatable sources |
| Hallucination Risk | Higher | Lower (answers grounded in retrieved data) |
| Relevance | General responses | Domain-specific answers |
| Updates | Requires retraining | Update the knowledge base, no retraining |

How to Implement RAG with ChatGPT Prompts

Setting up RAG with ChatGPT takes some technical work, but tools like LangChain or LlamaIndex make it easier. Here’s how to do it:

- Select a Knowledge Base: Choose relevant documents, like company records or recent articles.

- Index the Data: Split the documents into chunks, convert each chunk into an embedding (a numeric vector), and store the vectors in a vector index such as FAISS for fast retrieval.

- Retrieve Information: When a user asks a question, the system embeds the question and finds the most similar chunks using vector similarity search.

- Augment the Prompt: Combine the retrieved data with the user’s question to create a new prompt.

- Generate the Response: Send the augmented prompt to ChatGPT, which answers using the retrieved information.
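The indexing and retrieval steps can be illustrated without any external library. The sketch below is an assumption-laden stand-in: `embed` uses simple word counts where a real system would call an embedding model, and `VectorIndex` is a hypothetical class that scans every chunk with cosine similarity, which is the job FAISS or a vector database does far more efficiently at scale.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def chunk(text: str, size: int = 50) -> List[str]:
    """Split a document into chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text: str) -> Counter:
    """Toy embedding (assumption): word counts instead of a learned vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorIndex:
    """Hypothetical brute-force index: stores (vector, chunk) pairs."""

    def __init__(self) -> None:
        self.entries: List[Tuple[Counter, str]] = []

    def add(self, document: str, chunk_size: int = 50) -> None:
        """Index the data: chunk the document and store each chunk's vector."""
        for piece in chunk(document, chunk_size):
            self.entries.append((embed(piece), piece))

    def search(self, query: str, k: int = 3) -> List[Tuple[float, str]]:
        """Retrieve: score every chunk against the query and keep the top k."""
        q = embed(query)
        scored = [(cosine(q, vec), text) for vec, text in self.entries]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


if __name__ == "__main__":
    index = VectorIndex()
    index.add("Returns are accepted within 30 days with a receipt.")
    index.add("Shipping is free on orders over 50 dollars.")
    print(index.search("How many days do I have for returns?", k=1))
```

Swapping in real embeddings changes only `embed`; the chunk, index, and search flow stays the same.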

Tools to Use

- LangChain: Simplifies RAG setup by handling retrieval and prompt integration.

- LlamaIndex: Connects ChatGPT to external data sources efficiently.

- FAISS: An open-source library from Meta for fast vector similarity search, often used as the index behind a RAG retriever.

Best Practices for RAG-Enhanced Prompts

Crafting effective prompts is critical for RAG success. Follow these tips:

- Be Clear: Specify the knowledge source, e.g., “Use the latest product manual to answer.”

- Add Context: Include details about the domain or task to guide ChatGPT.

- Use Simple Language: Write prompts in clear, natural language, as if you were asking a knowledgeable colleague.

- Test Prompts: Experiment with different prompts to find the most effective ones.

Example Prompts

Effective Prompt:

“Using the 2025 AI regulation documents, summarize key compliance requirements.”

Ineffective Prompt:

“What are AI regulations?” (Too vague, lacks source specification.)
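The prompt tips above can be combined into a reusable template. This is a hypothetical helper, not a LangChain or LlamaIndex API: `build_rag_prompt` names the knowledge source, states the domain, numbers the retrieved excerpts so the answer can cite them, and tells the model what to do when the excerpts do not contain the answer. The excerpt text in the usage example is a placeholder.

```python
from typing import List


def build_rag_prompt(question: str, source_name: str, domain: str, passages: List[str]) -> str:
    """Assemble a RAG prompt that names its source, sets context, and numbers excerpts."""
    numbered = "\n".join(f"[{i}] {text}" for i, text in enumerate(passages, start=1))
    return (
        f"You are helping with a {domain} question.\n"
        f"Answer using only the excerpts from {source_name} below, "
        "and cite excerpt numbers such as [1].\n"
        "If the excerpts do not contain the answer, say so instead of guessing.\n\n"
        f"Excerpts:\n{numbered}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    print(build_rag_prompt(
        question="Summarize the key compliance requirements.",
        source_name="the 2025 AI regulation documents",
        domain="legal compliance",
        passages=["(retrieved excerpt 1)", "(retrieved excerpt 2)"],
    ))
```

Numbering the excerpts makes it easier to check which retrieved passage supports each part of the answer when testing prompt variations.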

For more on prompt engineering, see our post on how to humanize ChatGPT content.

Real-World Applications

Customer Support

A retail company uses RAG with ChatGPT to answer customer queries. When asked about return policies, the chatbot retrieves the latest policy document, reducing errors by 25% compared to ChatGPT alone.

Research Assistance

A scientist uses RAG to query recent studies. By retrieving papers from a database, ChatGPT provides accurate summaries, saving hours of manual research.

Learn how ChatGPT aids research in our post on using ChatGPT for UX research plans.

Challenges and Solutions

- Challenge: Low-quality retrieval can lead to irrelevant answers.
  Solution: Use advanced retrieval methods like hybrid search or reranking.

- Challenge: Complex prompts may confuse ChatGPT.
  Solution: Simplify prompts and test iteratively.

- Challenge: Data privacy concerns with sensitive information.
  Solution: Encrypt knowledge bases and limit access.
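One common way to implement hybrid search is reciprocal rank fusion (RRF): run a keyword search and a vector search separately, then merge their ranked results so documents that rank well in either list rise to the top. The sketch below assumes each search already returns an ordered list of document IDs; the IDs in the example are hypothetical, and `k=60` is the constant typically used with RRF. Reranking, the other option listed above, would instead rescore the merged candidates with a separate model.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge several ranked lists of document IDs into one hybrid ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1. Higher total scores rank first.
    """
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


if __name__ == "__main__":
    keyword_hits = ["policy_v3", "faq", "blog"]    # hypothetical keyword-search results
    vector_hits = ["policy_v3", "manual", "faq"]   # hypothetical vector-search results
    print(reciprocal_rank_fusion([keyword_hits, vector_hits]))
    # → ['policy_v3', 'faq', 'manual', 'blog']
```

RRF only uses rank positions, so it can merge keyword and vector results even though their raw scores are on different scales.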

Conclusion

Combining RAG with ChatGPT prompts produces AI responses that are more accurate, relevant, and up to date. By addressing ChatGPT’s limitations, RAG extends its usefulness in customer support, research, and more. With tools like LangChain and careful prompt engineering, implementing RAG is within reach of most development teams. Start with a small knowledge base and a few test prompts, then expand from there.

FAQs

What is RAG?

RAG combines information retrieval with text generation to improve AI accuracy.

How does RAG improve ChatGPT?

It grounds answers in retrieved documents, which reduces errors and gives ChatGPT access to current, domain-specific information.

What tools support RAG implementation?

LangChain, LlamaIndex, and FAISS are popular choices.

Can RAG work with sensitive data?

Yes, with proper encryption and access controls.

How do I write RAG prompts?

Be clear, add context, use simple language, and test variations.